Data310_workbook

Response 3

1) Last time you did an exercise (convolutions and pooling) where you manually applied a 3x3 array as a filter to an image of two people ascending an outdoor staircase. Modify the existing filter and if needed the associated weight in order to apply your new filters to the image 3 times. Plot each result, upload them to your response, and describe how each filter transformed the existing image as it convolved through the original array and reduced the object size. What are you functionally accomplishing as you apply the filter to your original array (see the following snippet for reference)? Why is the application of a convolving filter to an image useful for computer vision? Stretch goal: instead of using the misc.ascent() image from scipy, can you apply three filters and weights to your own selected image? Again describe the results.

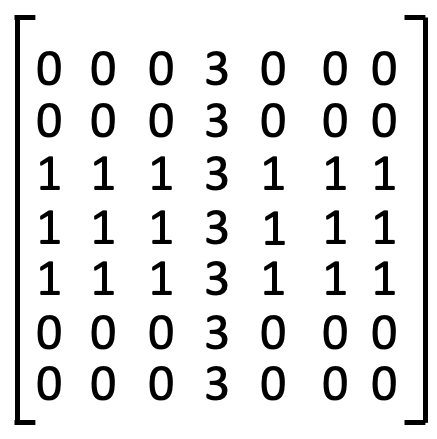

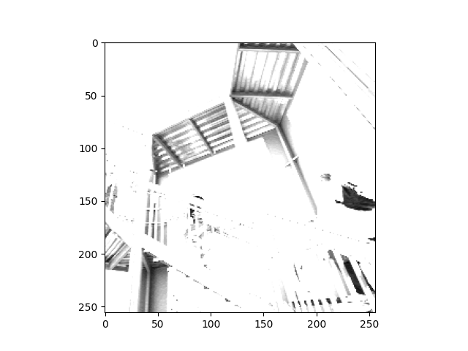

Filter 1

filter = [ [0, 1, 0], [1, -4, 1], [0, 1, 0]]

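This filter emphasizes the lines and edges in the image, including the horizontal lines of the stairs. Its weights sum to zero, so flat regions go dark and only places where the brightness changes stand out; because the kernel is symmetric, it responds to edges in every direction rather than horizontal ones alone.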

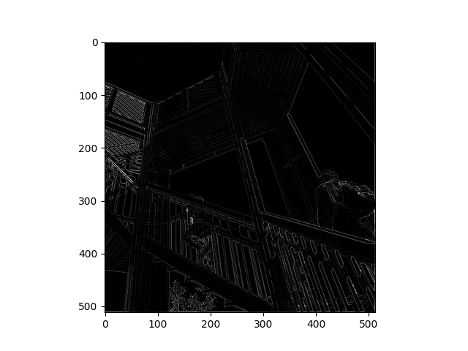

Filter 2

filter = [ [-1, -2, -1], [0, 0, 0], [1, 2, 1]]

This filter emphasizes the vertical lines in the image.

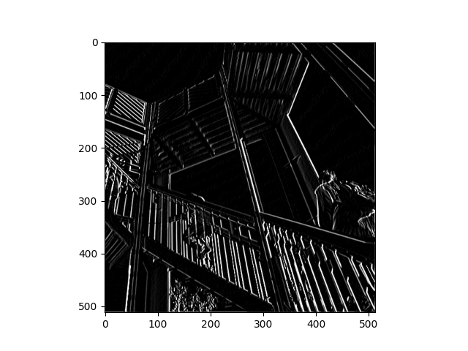

Filter 3

filter = [ [-1, 2, 1], [-2, 1, 2], [-1, 2, 1]]

This filter emphasizes the shadows in the image, like underneath the stairs and the walkway.
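The question also asks about the weight that goes with each filter. The response does not say which weights were used, so the following is only a sketch under one common rule of thumb: if the entries of a filter do not sum to 0 or 1, scale by one over their sum so the output stays in a comparable brightness range. The helper name `suggested_weight` is hypothetical.

```python
# The three filters from this response (copied from above).
filters = {
    "filter_1": [[0, 1, 0], [1, -4, 1], [0, 1, 0]],
    "filter_2": [[-1, -2, -1], [0, 0, 0], [1, 2, 1]],
    "filter_3": [[-1, 2, 1], [-2, 1, 2], [-1, 2, 1]],
}


def suggested_weight(kernel):
    """Hypothetical helper: return 1/sum unless the entries sum to 0 or 1."""
    total = sum(sum(row) for row in kernel)
    if total in (0, 1):
        return 1.0
    return 1.0 / total


weights = {name: suggested_weight(kernel) for name, kernel in filters.items()}

for name, kernel in filters.items():
    total = sum(sum(row) for row in kernel)
    print(name, "sum =", total, "suggested weight =", weights[name])
```

Under this rule, Filters 1 and 2 sum to 0 and need no adjustment, while Filter 3 sums to 5, so a weight of 1/5 would be one reasonable choice.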

Convolving an image is useful for computer vision because it lets the user pick out the features of an image that matter most (edges, lines, shadows) and have the computer emphasize them. Functionally, each output pixel is a weighted sum of the corresponding input pixel and its eight neighbors, with the weights given by the filter. Then, when we need to run a model, the prominent features in the filtered arrays will be easier for the model to identify.
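To make the weighted-sum idea concrete, here is a minimal sketch of applying a 3x3 filter by hand. It is not the exercise's exact code: the function name `convolve3x3`, the tiny 5x5 test image, and the choice to clamp results to the 0 to 255 range are assumptions made for illustration. Like most deep learning code, it does not flip the kernel, so strictly speaking it computes a cross-correlation.

```python
def convolve3x3(image, kernel, weight=1.0):
    """Apply a 3x3 kernel to a 2D list of pixels; border pixels stay 0."""
    rows, cols = len(image), len(image[0])
    out = [[0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            total = 0.0
            # Weighted sum of the pixel and its eight neighbors.
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    total += image[r + dr][c + dc] * kernel[dr + 1][dc + 1]
            total *= weight
            # Clamp to the valid grayscale range (an assumption of this sketch).
            out[r][c] = min(255, max(0, total))
    return out


# Hypothetical test image: dark on the left, bright on the right.
image = [[0, 0, 255, 255, 255] for _ in range(5)]

# Filter 1 from this response.
filter_1 = [[0, 1, 0], [1, -4, 1], [0, 1, 0]]

result = convolve3x3(image, filter_1)
for row in result:
    print(row)
```

In the flat regions the weighted sum cancels to zero, and only the column next to the dark-to-bright boundary lights up, which is the edge-emphasizing behavior described for Filter 1.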

My image: Bloodroot Flower

I ran the convolution with the same 3 filters as the ascent stairs picture (misc.ascent()).

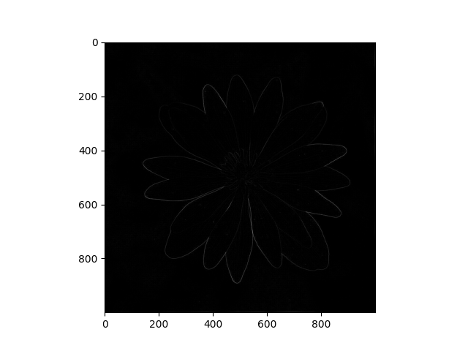

Filter 1

This filter seems to emphasize only the outline of the flower. There is less contrast in this image between the background and the flower (compared to the strong contrast in the ascent picture), which means that the filter took away more of the detail.

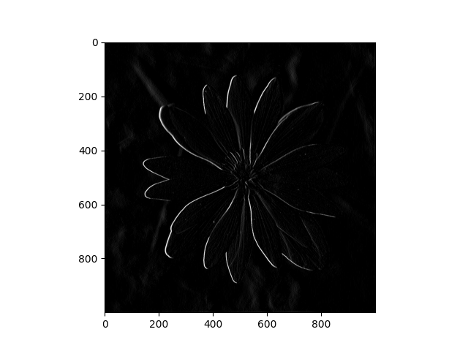

Filter 2

This filter does a better job of emphasizing the individual petals of the flower, but it also picks up on the sticks in the background of the picture, so it might not be as useful for identifying petals. It is the only filter to highlight the center of the flower, though.

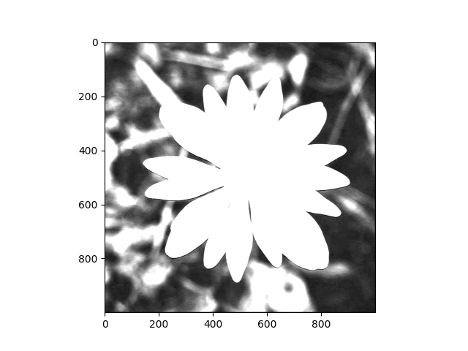

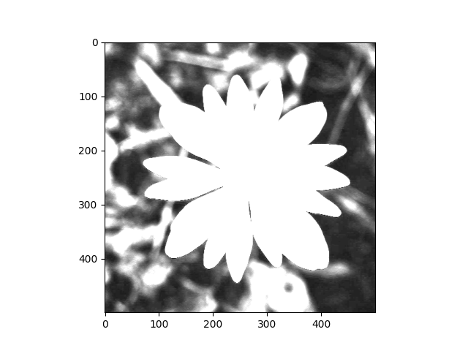

Filter 3

This filter emphasizes the sticks in the background of the picture, which aligns with the shadows it picked out in the ascent picture.

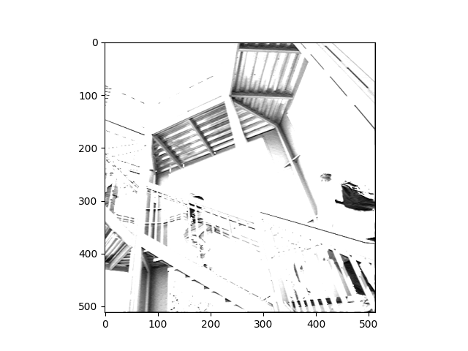

2) Another useful method is pooling. Apply a 2x2 filter to one of your convolved images, and plot the result. In effect what have you accomplished by applying this filter? Does there seem to be a logic (i.e. maximizing, averaging or minimizing values?) associated with the pooling filter provided in the example exercise (convolutions & pooling)? Did the resulting image increase in size or decrease? Why would this method be useful? Stretch goal: again, instead of using misc.ascent(), apply the pooling filter to one of your transformed images.

Pooling on Ascent Image

Pooling on Bloodroot Flower

Pooling makes the image smaller: a 2x2 pool halves each dimension, so the result has one quarter as many pixels. That makes it cheaper for a computer to process while still keeping the most important aspects of the image. As explained in the ML video, pooling usually keeps the maximum value. At first glance the for loops that complete the pooling seem to be choosing every other value in the array, but stepping by two is how each 2x2 block gets visited exactly once; the largest of the four values in each block is then kept, so the logic is maximizing rather than random.
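The pooling step can be sketched in a few lines. This is an illustrative version rather than the exercise's code: the name `max_pool_2x2` and the 4x4 sample array are made up, and the sketch assumes max pooling with non-overlapping 2x2 blocks.

```python
def max_pool_2x2(image):
    """Keep the largest value from each non-overlapping 2x2 block."""
    rows, cols = len(image), len(image[0])
    pooled = []
    # Step by 2 so every 2x2 block is visited once; an odd trailing
    # row or column is dropped.
    for r in range(0, rows - 1, 2):
        pooled.append([
            max(image[r][c], image[r][c + 1],
                image[r + 1][c], image[r + 1][c + 1])
            for c in range(0, cols - 1, 2)
        ])
    return pooled


# Hypothetical 4x4 "image".
image = [
    [1, 3, 2, 0],
    [4, 2, 1, 1],
    [0, 0, 5, 6],
    [7, 1, 8, 2],
]

pooled = max_pool_2x2(image)
print(pooled)  # [[4, 2], [7, 8]]
```

The 4x4 input becomes a 2x2 output, and each output value is the strongest response in its block, which is why the emphasized features survive the downsizing.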

3) Convolve the 3x3 filter over the 9x9 matrix and provide the resulting matrix. link to matrices

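The resulting matrix is a 7x7 grid. A 3x3 filter needs a full 3x3 neighborhood around each position, so the outermost row and column on every side cannot be filtered: 9 - 3 + 1 = 7 in each dimension.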
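The size arithmetic can be checked with a short sketch. The actual matrices from the linked exercise are not reproduced here, so the 9x9 checkerboard input and the 3x3 kernel below are hypothetical stand-ins; only the output shape carries over to the real problem.

```python
def valid_output_size(n, k):
    """Output length when a size-k filter slides over size-n input, no padding."""
    return n - k + 1


def convolve_valid(matrix, kernel):
    """Slide the kernel over every position where it fits entirely."""
    k = len(kernel)
    out_rows = valid_output_size(len(matrix), k)
    out_cols = valid_output_size(len(matrix[0]), k)
    return [
        [
            sum(matrix[r + i][c + j] * kernel[i][j]
                for i in range(k) for j in range(k))
            for c in range(out_cols)
        ]
        for r in range(out_rows)
    ]


# Hypothetical 9x9 checkerboard of 0s and 1s (not the exercise's matrix).
matrix = [[(r + c) % 2 for c in range(9)] for r in range(9)]
# Hypothetical 3x3 kernel (not the exercise's filter).
kernel = [[1, 0, 1], [0, 1, 0], [1, 0, 1]]

result = convolve_valid(matrix, kernel)
print(len(result), len(result[0]))  # 7 7
```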