Data310_workbook

Response 2

1. In the video First steps in computer vision, Laurence Moroney introduces us to the Fashion MNIST data set and uses it to train a neural network in order to teach a computer “how to see.” One of the first steps towards this goal is splitting the data into two groups: a set of training images and training labels, and a set of test images and test labels. Why is this done? What is the purpose of splitting the data into a training set and a test set?

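The data is split so that the neural network can be fit on one set of images and evaluated on another. The training images and labels are used to learn the weights that map an image to its clothing class (for example, shoe or shirt). The test images have the same format but are never shown to the network during training, so the accuracy on them estimates how well the model generalizes to images it has never encountered, rather than how well it has memorized the training set.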

2. The Fashion MNIST example has increased the number of layers in our neural network from 1 in the past example to 3. The last two are Dense layers that have activation arguments using the relu and softmax functions. What is the purpose of each of these functions? Also, why are there 10 neurons in the third and last layer in the neural network?

The relu function returns its input when the input is positive and zero otherwise, so any value less than zero is set to zero. This gives the hidden layer a nonlinearity, which lets the network learn patterns that a purely linear model could not. The softmax function converts the ten raw outputs of the last layer into probabilities that each lie between 0 and 1 and together sum to 1. The largest output receives the highest probability, which makes it easy to pick the most likely class. There are 10 neurons in the last layer because Fashion MNIST has 10 classes of clothing, and each neuron outputs the probability that the image belongs to its class.
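To make the two activation functions concrete, here is a minimal NumPy sketch of both. The helper names `relu` and `softmax` and the input values are made up for illustration; Keras applies its own built-in versions when `activation="relu"` or `activation="softmax"` is passed to a layer.

```python
import numpy as np

def relu(x):
    """Return x where x is positive and 0 elsewhere."""
    return np.maximum(0, x)

def softmax(x):
    """Turn a vector of raw scores into probabilities that sum to 1."""
    shifted = x - np.max(x)  # subtracting the max avoids overflow in exp
    exps = np.exp(shifted)
    return exps / np.sum(exps)

hidden = np.array([-2.0, 0.5, 3.0])  # made-up hidden-layer values
print(relu(hidden))                  # the negative entry becomes 0

scores = np.array([1.0, 2.0, 5.0])   # made-up raw outputs of the last layer
probs = softmax(scores)
print(probs, probs.sum())            # each value is between 0 and 1; they sum to 1
print(np.argmax(probs))              # index of the most likely class
```

Note that softmax does not set the winning neuron to exactly 1. It keeps a probability for every class, and `np.argmax` is what picks the single most likely one.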

3. In the past example we used the optimizer and loss function, while in this one we are using the function adam in the optimizer argument and sparse_categorical_crossentropy for the loss argument. How do the optimizer and loss functions operate to produce model parameters (estimates) within the model.compile() function?

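The loss function measures how far the model's predictions are from the true labels, so a lower loss means a better answer. The optimizer (here adam) uses that loss to adjust the weights and biases of the network after each pass so that the loss decreases the next time the model runs. The loss is a categorical cross-entropy because the network is choosing among multiple classes, and it is the sparse version because the labels are stored as integers (0 to 9) rather than one-hot vectors. model.compile() only configures these choices. The parameters are actually estimated when model.fit() runs.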
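The interaction between loss and optimizer can be sketched in a few lines of NumPy. This is a simplified illustration under stated assumptions, not what Keras does internally: it uses a single example with three classes, treats the raw scores themselves as the parameters being learned, and uses plain gradient descent in place of adam. The names `label`, `logits`, and `learning_rate` are hypothetical.

```python
import numpy as np

def softmax(x):
    exps = np.exp(x - np.max(x))
    return exps / np.sum(exps)

def sparse_categorical_crossentropy(label, probs):
    """Loss for one example: negative log of the probability given to the true class."""
    return -np.log(probs[label])

label = 2             # true class, stored as an integer (hence "sparse")
logits = np.zeros(3)  # hypothetical raw scores for 3 classes, all equal at first
learning_rate = 1.0   # illustrative step size

losses = []
for step in range(5):
    probs = softmax(logits)
    losses.append(sparse_categorical_crossentropy(label, probs))
    # Gradient of the loss with respect to the scores: probs minus the one-hot label.
    grad = probs.copy()
    grad[label] -= 1.0
    # Plain gradient-descent update (adam additionally adapts the step size per parameter).
    logits -= learning_rate * grad

print(losses)  # the loss shrinks as the scores move towards the true class
```

Each pass through the loop mirrors one training step: predict, measure the loss, then let the optimizer nudge the parameters in the direction that lowers it.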

4. Using the mnist drawings dataset (the dataset with the hand written numbers with corresponding labels) answer the following questions.

a. What is the shape of the images training set (how many and the dimension of each)?

The training images have shape (60000, 28, 28): 60,000 images, each 28x28 pixels.

b. What is the length of the labels training set?

60,000 labels, one per training image, each an integer from 0 to 9 (10 classes).

c. What is the shape of the images test set?

The test images have shape (10000, 28, 28): 10,000 images, each 28x28 pixels.

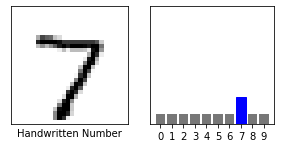

d. Estimate a probability model and apply it to the test set in order to produce the array of probabilities that a randomly selected image is each of the possible numeric outcomes (look towards the end of the basic image classification exercises for how to do this; you can apply the same method used on the Fashion MNIST dataset, but now apply it to the handwritten digits MNIST dataset).

classifications = model.predict(x_test)

print(classifications[0])

[2.0541737e-09 8.0926675e-08 2.8054221e-06 4.3037235e-05 5.7184557e-12 3.7644522e-08 2.2514300e-13 9.9995327e-01 3.1266808e-07 4.5281493e-07]

5. Use np.argmax() with your predictions object to return the numeral with the highest probability from the test labels dataset.

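np.argmax(classifications[0]) returns 7, so the model predicts that the first test image is the digit 7.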
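As a quick check, np.argmax can be applied directly to the probabilities printed above for the first test image. The array below is copied from that output; the variable names `first_prediction` and `predicted_digit` are only for illustration.

```python
import numpy as np

# Probabilities printed for classifications[0], one entry per digit 0 to 9.
first_prediction = np.array([
    2.0541737e-09, 8.0926675e-08, 2.8054221e-06, 4.3037235e-05, 5.7184557e-12,
    3.7644522e-08, 2.2514300e-13, 9.9995327e-01, 3.1266808e-07, 4.5281493e-07,
])

predicted_digit = np.argmax(first_prediction)
print(predicted_digit)                    # position of the largest probability
print(first_prediction[predicted_digit])  # the probability assigned to that digit
```

Because the positions in the array correspond to the digits 0 through 9, the index returned by np.argmax is itself the predicted numeral.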

6. Produce a plot of your selected image and the accompanying histogram that illustrates the probability of that image being the selected number.
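One possible way to produce such a figure is sketched below with matplotlib. The function name `plot_prediction` is hypothetical, the chart is a bar chart of the ten class probabilities rather than a true histogram, and the inputs are placeholders so the sketch runs on its own. In the workbook they would be replaced by `x_test[0]` and `classifications[0]`.

```python
import numpy as np
import matplotlib.pyplot as plt

def plot_prediction(image, probabilities):
    """Show a 28x28 image next to a bar chart of its 10 class probabilities."""
    fig, (ax_img, ax_bar) = plt.subplots(1, 2, figsize=(8, 3))
    ax_img.imshow(image, cmap="gray")
    ax_img.set_title(f"Predicted digit: {np.argmax(probabilities)}")
    ax_img.axis("off")
    ax_bar.bar(range(10), probabilities)
    ax_bar.set_xticks(range(10))
    ax_bar.set_xlabel("Digit")
    ax_bar.set_ylabel("Probability")
    ax_bar.set_ylim(0, 1)
    return fig

# Placeholder inputs (a blank image and a made-up probability vector),
# standing in for x_test[0] and classifications[0].
image = np.zeros((28, 28))
probabilities = np.zeros(10)
probabilities[7] = 1.0
fig = plot_prediction(image, probabilities)
```

In a notebook the figure is displayed automatically; in a script, calling `plt.show()` afterwards opens it.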